Inside the far-right disinformation networks that fuelled the UK riots

Unchecked social media platforms provide the perfect environment for Islamophobic anti-immigrant groups to organise and recruit

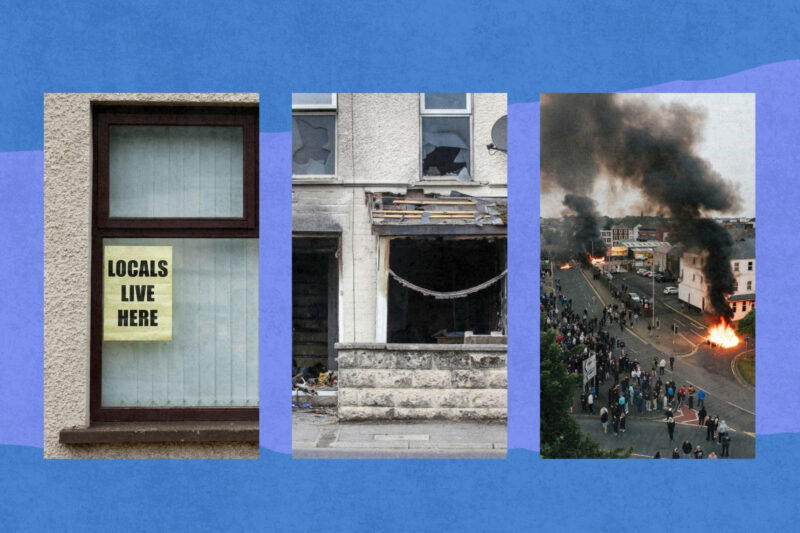

On 30 July, a large group gathered outside Southport mosque in Merseyside. The day before, an assailant had entered a Taylor Swift-themed dance class in the town and stabbed several children and two adults. Three of the young victims died. Owing to the age of the suspect, police could not immediately identify him, but online speculation was rife that he was an undocumented Muslim immigrant. In response, the crowd began to direct its anger towards the mosque, hurling bricks and rocks. A police van was set on fire and 39 officers were injured.

In the following days, the violence spread. Manchester, Sunderland, Middlesbrough, Hartlepool, Portsmouth, Belfast and more. Far-right mobs launched attacks on centres and hotels housing people seeking asylum. In Rotherham, South Yorkshire, one tried to set a Holiday Inn Express on fire. Even after the lifting of reporting restrictions regarding the Southport suspect’s identity — which revealed that he was 17 and born in Cardiff to non-Muslim, Rwandan parents — the turmoil continued. Fuelled by online misinformation, the UK experienced its worst unrest for more than a decade.

As soon as news of the stabbings broke, prominent rightwing figures advanced uncorroborated theories regarding the motivations and background of the attacker, but did not offer a name. Andrew Tate, a notoriously misogynistic influencer who recently claimed to have converted to Islam, posted that an undocumented immigrant was responsible.

Rightwing activist Laurence Fox, who has more than half a million followers on X, posted: “Enough of this madness now. We need to permanently remove Islam from Great Britain. Completely and entirely.” Other pundits, such as GB News presenter and Brexit campaigner Darren Grimes, linked the attack to government refugee policy.

Among the loudest voices was Stephen Yaxley-Lennon — also known as Tommy Robinson — founder of the now-disbanded far-right English Defence League (EDL). On X, he accused the authorities of “managing” the public response to the stabbings: “Their bodies are barely cold, others fighting for their lives in hospital. And their goal is to manipulate us!” On 30 July, he posted suggesting that the attack was linked to Islam.

Disinformation expert Marc Owen Jones, an associate professor at Northwestern University in Qatar, believes that figures such as Yaxley-Lennon follow a well-established playbook when responding to events such as the Southport killings. “It feels like there’s a pattern to it,” he said. “You lay the seeds in a particular order. You don’t come straight out with a name. You set your stall out and sort of lead people down this logical path of speculation that has one inevitable conclusion.”

Rumours and questioning of the official line began just hours after the stabbings. One of the first posts on social media containing inaccurate claims about the attacker was made at 4.49pm on 29 July, when anti-lockdown activist Bernadette Spofforth circulated a screenshot from LinkedIn that appeared to show a message from a parent whose children had been at the scene of the stabbings.

The screenshot said that the attacker was an immigrant and called for the complete closure of UK borders. Spofforth blamed “Ali al-Shakati”, who she claimed was an asylum seeker “who came to the UK by boat last year”, was known to mental health services and was on a security service watch list.

That fabricated name tore across the internet like wildfire, although Spofforth — who eventually deleted her post — has told the Daily Mail she was not the first person to share it. Cheshire police have subsequently arrested and bailed a woman on suspicion of stirring racial hatred and spreading false information.

Later on 29 July, Channel3 Now, a pseudo-news account on X with fewer than 3,500 followers, included the name al-Shakati in an article. So did the X account End Wokeness, which has 2.8 million followers. According to the Institute for Strategic Dialogue, by 3pm the next day “al-Shakati” had received more than 30,000 mentions on X by more than 18,000 unique accounts. As quickly became clear, when enough people post something online, truth no longer matters.

“It becomes a narrative,” said Jones. “What we’re getting now are people within this context super-charging these rumours.” By way of example, Jones points to Europe Invasion, another pseudo-news account on X that took up the narrative, which he said “is clearly using manipulation and its figures are insane”.

Although Europe Invasion’s account information states that it was established in 2010, all posts before February 2024 have been deleted. Since then, it has produced a stream of Islamophobic, anti-immigrant posts. They often start with a siren emoji and “BREAKING” in the style of a legitimate news outlet, and its profile image includes the slogan “News from Europe” over a circle of yellow stars on a blue background, reminiscent of the EU flag. It now has more than 43,500 followers and regularly receives thousands of likes and retweets. Since 27 July, it has racked up more than 130 million impressions.

Just a small number of slickly curated pseudo-news accounts such as Europe Invasion can wield huge influence in the aftermath of a high-profile attack. One day before the Southport killings, false rumours circulated on social media after the fatal attack on Anita Rose, 57, in Suffolk, stating the suspects were Somalian. That narrative was amplified by Europe Invasion and the personal X account of Ashlea Simon, co-leader of far-right party Britain First. Earlier in July, anti-Muslim speculation about the unrest in the Harehills area of Leeds followed a similar pattern.

Some prominent online figures opt for a more subtle approach. Rather than making and amplifying demonstrably false claims, they “just ask questions”. Take, for instance, Nigel Farage, Reform UK leader and MP for the Essex constituency of Clacton. Just after 5.30pm on 30 July, he posted a video on X about the Southport killings, wondering aloud whether “the truth was being withheld” from the public. That night, the violence began.

Farage’s comments drew widespread criticism from politicians, the media and former counter-terrorism police chief Neil Basu, who stated: “Nigel Farage is giving the EDL succour, undermining the police, creating conspiracy theories, and giving a false basis for the attacks on the police.”

While X played a significant part in the public spread of fake news and anti-Muslim rhetoric, another platform made an equally powerful but less immediately visible contribution.

Soon after the killings, a chat group titled Southport Wake Up launched on Telegram, a messaging app and social network popular with the far right. The group’s creator, a user named Stimpy, posted false information on the incident and racist propaganda to a rapidly growing number of subscribers. The location of the mosque was also shared. Calls for a protest were then shared by far-right figures including Daniel Thomas, a close associate of Yaxley-Lennon.

Early on, Al Baker, managing director of the open-source research company Prose Intelligence, noticed that many of the Telegram groups he monitors — including those based in conspiracy theory and extreme rightwing ideology — contained posts that speculated that the Southport attacker would be a Muslim immigrant.

The aim of Southport Wake Up and subsequent similar groups appeared to be to channel the anger of such individuals into organised unrest and, later, possibly lead them into a deeper relationship with the far right. Baker refers to a number of extreme groups involved in the proliferation of online disinformation during the riots as “neo-Nazi accelerationists who probably will have seen people trying to set fire to that hotel and thought, ‘Wow, all our dreams have come true, this is how we’re going to start the race war that we want.’”

According to Baker, both Telegram and X proved central to the flow of disinformation simply because they are the online platforms that far-right communities have been allowed to operate on. While that is a longstanding situation for Telegram, it is a more recent development on X and can be directly linked to the unblocking of previously banned accounts — including those of Yaxley-Lennon and Britain First — that followed the 2022 takeover of Twitter by Elon Musk.

“The networks, the community, the infrastructure was already there to spread this around quickly, to make sure it was picked up, to make sure it was impactful,” Baker said. “The kinds of activity we saw on both X and Telegram are a foreseeable consequence of allowing these kinds of communities to have a safe haven on these platforms.”

As the riots continued, Telegram drew increasing media attention, notably when a list of supposed targets, including asylum centres and the offices of immigration lawyers, was shared on the platform. According to Baker, the list originated on Southport Wake Up, then spread across other far-right groups. Logically, a tech company that fights disinformation, has stated that channels linked to extreme-right groups including the UK-based Patriotic Alternative and other proscribed international terror organisations participated in promotion and planning during the unrest.

“The fact that a list compiled by and first published in a relatively small Telegram channel subsequently spread nationwide shows how easily far-right individuals can spread fear and possibly mobilise violence by using social media,” Joe Mulhall of the anti-racism group Hope Not Hate said in a statement, sent via email, in response to questions for this article.

Although UK-based far-right social media networks and prominent figures such as Farage and Yaxley-Lennon were important vectors for the rhetoric that formed a backdrop to the riots, foreign actors also seized upon events in the UK. Much has been made by some government figures of the possible covert involvement of hostile states in the dissemination of fake news during the violence, but the evidence is meagre. However, one of the most powerful amplifiers of such content — both directly and indirectly — is the owner of X himself.

After being shut out of Twitter five years earlier, Yaxley-Lennon was allowed back in November 2023 as part of a sweeping reinstatement of banned accounts presided over by Musk. In his time away, Yaxley-Lennon had built a significant presence on Telegram, with more than 100,000 subscribers. Returning to X, where he now has close to a million followers, enabled him and others to leverage both communities, cross-posting between platforms and taking advantage of the relative privacy of Telegram to organise at street level.

“The relationship between X and Telegram seems to have changed in that you find a lot of the same people on both now, where previously they would just not have been welcome on Twitter,” said Baker. “I have been very shocked at the level of extreme discourse which has been allowed to propagate on X. It looks more and more like Telegram every day.”

Jones notes that the online ecosystem that helped to propagate the UK riots has been evolving for some time and has now developed into a perfect medium for far-right propaganda. “The whole point of a successful disinformation campaign is to make it cross-platform,” he said. “If, after seeing something on X, people find it on Facebook too, that creates the illusion of credibility.”

Soon after his acquisition of Twitter, Elon Musk dismantled the company’s trust and safety council and replaced the previous blue tick verification system for public figures and organisations with a much-criticised version available to account holders willing to subscribe to the X Premium service.

Musk’s political positions have also shifted sharply to the right. In the past few months he has posted apocalyptic, factually inaccurate and conspiracist content to his near-200 million following, particularly in relation to matters concerning free speech, immigration and “western civilisation”.

During the riots he posted that civil war was “inevitable” in the UK and shared content including a false story posted by Ashlea Simon from Britain First about “detainment camps” for rioters being set up on the Falkland Islands. In addition to criticising the UK police and comparing the country to the Soviet Union for arresting individuals suspected of inflammatory social media activity, Musk replied to posts by Europe Invasion and Yaxley-Lennon, massively boosting their reach.

“He’s not only changed the nature of the platform to be more dangerous,” said Jones. “Personally, he is involved in fanning the flames of xenophobia and hatred, which was important in sustaining the violence we’ve seen in the UK.”

The riots subsided following a large-scale turnout of community organisations, grassroots counter-protesters and anti-fascist activists across the country, but the conspiracy theories keep coming.

False information has spread online that the list of targets was planted by the government in order to embarrass the far right and that mass anti-racist gatherings seen in cities including Brighton, London and Liverpool were part of the same plot. The idea that the alleged Southport attacker is an undocumented Muslim immigrant also persists, along with the belief that the identity released by police is part of an elaborate cover-up.

Establishment politicians from former home secretary Priti Patel to Farage have also not stopped complaining about “two-tier policing”, a conspiracy theory that rightwing, predominantly white people are treated more harshly than other groups. While the violence that swept across the UK may now be over, experts believe that the unchecked spread of such rhetoric and the continued existence of far-right social media networks pose a significant future threat.

“I would be very surprised if we didn’t see the organised right try again to recoup some of that momentum,” said Baker. “This was the biggest moment they’ve had in decades.”
