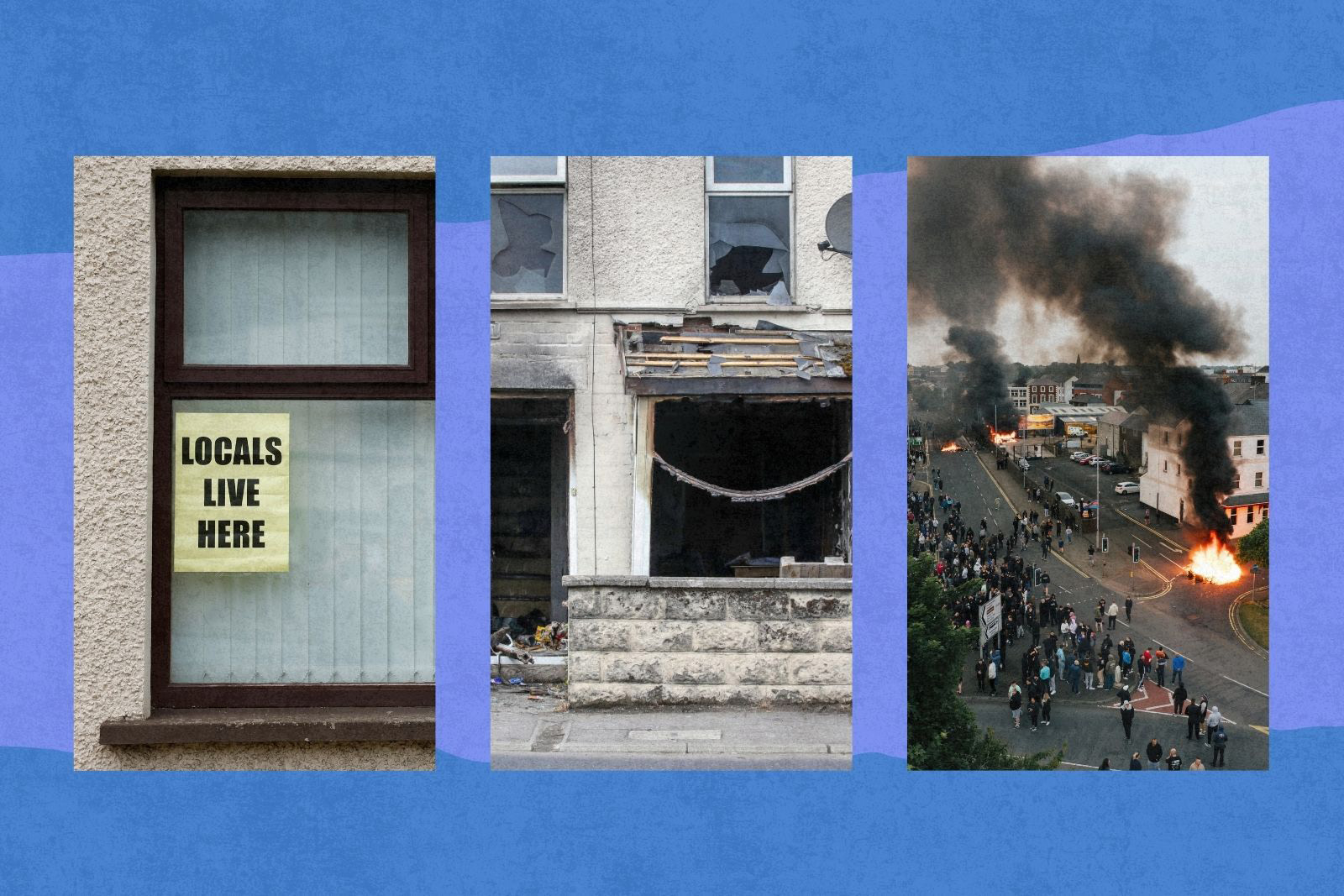

Overseas X accounts that fuelled 2024 riots still targeting UK Muslims

Accounts such as US-based End Wokeness were identified by MPs as key players in the spread of disinformation ahead of last summer’s violence

An anonymous US-based X account whose disinformation about the Southport attacker was cited by MPs as a key contributor to last year’s riots appears to have faced no penalty — and went on to initiate a hate campaign against Rotherham’s first female Muslim mayor almost a year later.

It is one of a pair of overseas accounts cited by one disinformation expert as having spread misleading claims in the wake of the Southport stabbings that have since targeted prominent British Muslims, eliciting apparent calls for Islamophobic violence from followers.

Hyphen also found that X has failed to remove a post from a third account that appears to incite people to “rise up” and “shoot” Keir Starmer — despite the social media company’s director for global government affairs, Wifredo Fernandez, telling MPs in February that his team would look into it. The chair of the science, innovation and technology committee (SITC), which Fernandez addressed, told Hyphen that X’s inaction was “particularly concerning” and that Ofcom, the UK online safety regulator, should intervene if necessary.

Our findings have sparked a warning from former equalities minister Anneliese Dodds, who resigned from the government in February, that there are “major regulatory gaps” in how Britain tackles online harm.

“I’m very concerned to see that these two accounts which promoted disinformation last July are still in operation and actively targeting people in Britain,” said the Labour MP. “Their hate-filled rhetoric fuelled the flames of hatred during last year’s riots, and is now spreading racist lies about British Muslim politicians and other public figures.”

“With every incident, we learn.” These were Fernandez’s words to MPs on the SITC in February as they grilled him and fellow social media executives on what they’d do differently if the riots happened again.

But experts have said the ongoing targeting of Muslim public figures shows X has not done enough to tackle the spread of Islamophobic disinformation and hate since last year’s riots.

Rukhsana Ismail, a charity chief executive, was sent Islamophobic abuse and threats within days of taking office as the mayor of Rotherham in May 2025, much of it from thousands of miles away.

“Islamophobia seems to be increasing and its amplification online is getting worse,” said Marc Owen Jones, an associate professor at Northwestern University in Qatar.

“It’s only a matter of time before we see another disinformation-driven incident leading to some level of civil unrest, perhaps even worse than what we saw in 2024.”

Urdu-language TV channel Dunya News interviewed Ismail after she was sworn in as mayor and posted its full report on YouTube on 19 May. An edited video, cut to show Ismail only speaking in Urdu, was widely shared on social media — mostly X — the next day, sparking false claims she had spoken no English during her inauguration, and could not speak it at all.

Analysis by Hyphen, verified by Jones, suggests X account End Wokeness — which has 3.8 million followers — may have been first to share this decontextualised clip in the early hours of 20 May. Lies about Ismail spread across three continents, gaining the most traction in the US, followed by the UK and India, analysis by Jones for Hyphen suggests.

After Cardiff-born Axel Rudakubana killed three children at a Taylor Swift-themed dance class in Southport on 29 July 2024, End Wokeness was among the accounts that helped spread fake news about his identity.

It shared bogus claims on X that the “suspect is a ‘asylum-seeker’ named Ali Al Shakati”, citing the pseudo-news account Channel3 Now.

The SITC this month published a report on how misinformation and “harmful algorithms” had helped fuel last summer’s riots, with a timeline that identifies End Wokeness’s post at 7.25pm on 29 July as a key event.

Jones also pointed to a second account, Radio Genoa, which claims to be run from Italy and has built up 1.4 million followers on X by publishing a steady stream of anti-migrant and anti-Muslim content.

Last summer, its posts on the Southport stabbings and riots racked up at least 11 million views. Among them was a widely viewed clip of the arrest of Southport man Jordan Davies, who was later found to have been on his way to join a mob attacking a mosque, with the caption: “He doesn’t look British.” It was viewed more than half a million times. Merseyside police have confirmed that Davies is British. Radio Genoa also questioned the force’s statement that Rudakubana was Welsh.

Since then, the page has targeted prominent British Muslims including Ofsted leader Sir Hamid Patel and Brighton and Hove city council’s mayor Mohammed Asaduzzaman, saying in a post viewed 1.3 million times that the latter could “hardly speak English”.

Replies to these posts included multiple apparent calls for violence against Muslims.

Andy George, president of the National Black Police Association, called for tougher legislation against online disinformation. “We’ve already seen how false narratives, especially those targeting British Muslims, can escalate into real-world violence and civil unrest,” he said.

In February, Fernandez told MPs that X had introduced “community notes” — user-generated fact-check boxes that appear under contentious posts — to help tackle misinformation, and that they had provided “useful context” amid the riots. The notes can stop posts being monetised, he said.

But neither End Wokeness’s post about Ismail nor any of the Radio Genoa posts referred to above has been tagged with a community note, and companies continue to advertise under End Wokeness’s content.

The platform has shown a similar reluctance to act when it comes to attacks on more senior politicians.

MPs quizzed Fernandez at the same event about a post from an X account that stated: “Why don’t we rise up and openly shoot [Starmer] as he needs to step down or people of the uk will force him too or if not I WILL.”

Fernandez promised: “I will have our teams review that.” But five months later, the post has not been taken down and no community notes have been attached to it.

Dame Chi Onwurah, who chairs the SITC, told Hyphen: “It’s particularly concerning that X has not acted to remove a post we raised with them in February, which calls for violence and makes a direct threat to the prime minister. X assured us in our evidence session that the matter would be reviewed. We hope Ofcom are prepared to intervene if X do not take appropriate action.”

The UK’s Online Safety Act, introduced in October 2023, has given tech companies a duty both to take down illegal content — such as religiously aggravated public order offences and posts inciting violence — and to stop it from appearing in the first place. It gives Ofcom the power to fine platforms up to £18m or 10% of their qualifying global revenue, whichever is greater.

But Onwurah told Hyphen her committee had found the legislation was “woefully inadequate”. “It doesn’t include measures to counter the algorithmic amplification of legal but harmful content such as misinformation,” she said. “This can be spread by bad actors, and stoke social division and drive real world violence, like we saw on British streets last summer.”

Earlier in July, Labour peer Maggie Jones — a parliamentary under-secretary for online safety — warned at a panel event that the government would “go further when and if that is needed” if tech companies did not comply with the new rules.

Any move to fine platforms or beef up Ofcom’s powers could set Starmer’s government on a collision course with Donald Trump’s administration, however. US state department officials have reportedly challenged Ofcom over the impact of online safety laws and vice president JD Vance has criticised their impact on American technology companies.

End Wokeness, Radio Genoa, the Department of Science, Innovation and Technology and X were all approached for comment.

Ofcom said its online safety role was to ensure sites have “appropriate systems and processes” to comply with the legislation, not tell them “which posts to take down”. But the regulator insisted it was “closely scrutinising” whether tech companies were complying with their duties by taking down illegal content that was flagged.
