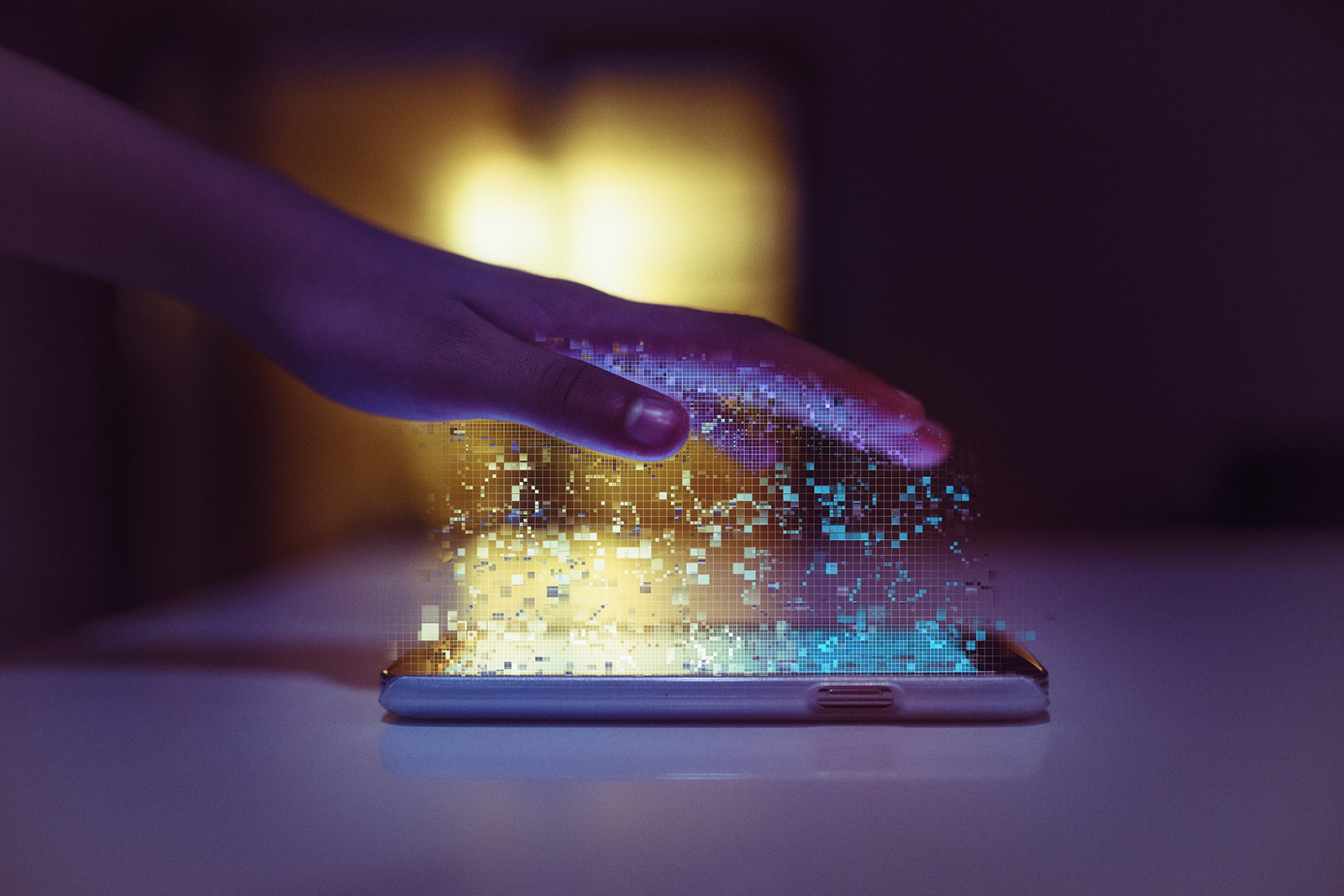

Sahdya Darr Q&A: ‘Human rights should be at the heart of technology and data policy’

The social justice advocate discusses the importance of knowing your digital rights

Sahdya Darr, 39, is an expert in digital rights and civil liberties. She has worked as an observer in Palestine, researched aid and development in Afghanistan and campaigned with the Quakers against the arms trade.

Since 2020, she has turned her attention closer to home, examining how technology, data and artificial intelligence can infringe the rights of minority communities for the Birmingham-based campaign group Data, Tech & Black Communities.

Now living in Solihull in the West Midlands, Darr spoke at Muslim Tech Fest in London on 1 June about her work as a community organiser.

Ahead of the conference, she spoke to Hyphen about the importance of digital literacy.

This interview has been edited for length and clarity.

What does Data, Tech & Black Communities do?

We look at the impact on Black children of EdTech: the educational apps and technologies used in schools, which range from systems that store contact details and track students' academic progress to remote learning software and fingerprint collection. Just as some organisations are making young people aware of their rights regarding policies such as stop and search, we're making them aware of their digital rights.

I think tech is sometimes sold as a solution to inequality, discrimination and oppression. We already know that the children most likely to be excluded from the education system are Black, so we want to know what impact the data collected by these apps is having on those children.

What is the general state of digital literacy in the UK?

I think there's a growing awareness of digital rights, but it's happening slowly. The UN and other organisations say digital rights are human rights, but people don't know enough about them or how to exercise them. Parents, for example, rarely go to a school and say, "So, you're using this technology. Can you please tell me where my child's data is being stored and for how long?"

Do you think Muslim communities on the whole have good digital literacy?

In 2021, I spoke to Waqas Tufail, a reader in criminology at Leeds Beckett University, who works on data and Islamophobia. I asked him whether he thought people in our communities knew about freedom of information, subject access requests (SARs) and how their data is being collected. He said he didn’t think so.

Subject access and freedom of information requests are tools more of us Muslims should be using. SARs empower individuals to access and control the personal data that organisations hold about them. That matters particularly given Islamophobia in the workplace, where the information disclosed could be used as evidence in grievances and tribunal proceedings.

My work is not just about raising the alarm about an issue or how people’s data rights are being infringed or violated. I’m trying to build a collective of Muslims who are concerned with data justice.

What are your concerns about tech and data policies?

One concern I have is the power and influence large tech companies are being allowed to exert in this policy area. It's self-serving and completely disregards human rights in pursuit of profit. Human rights should be at the heart of technology and data policy.

For example, the global Campaign to Stop Killer Robots is campaigning for the regulation of AI in warfare. We know that AI is being used in Ukraine and Gaza, and yet the campaign was silent and inactive on both. All of this led to several resignations, including my own. [The campaign group later released a statement expressing concern about the use of automated targeting systems in Gaza, and in March 2022 they condemned Russia's use of AI-governed munitions in Ukraine.] If we are to challenge big tech's power and influence, then we need to show solidarity.

How are community groups challenging data collection?

I think it's important to amplify the work of rights groups and others who haven't just sat by, such as the Racial Justice Network and Yorkshire Resists, who launched their Stop the Scan campaign against police use of biometrics in stop and search.

It’s not only about understanding your rights. We also need to remember as communities that we have power to resist these things and to stop their use.
